The Art of Saying "I Don't Know"

Data scientists are shaped by the questions they ask. Many things in the world are easily explained; many are not.

One of my favorite fields within analytics is forecasting. It is beautiful in many ways: forecasts can never be perfect, they are always full of uncertainty, and the art lies in bringing them as close as possible to what actually happens. Despite its reliance on heavy computation and mathematics, forecasting remains a field that combines calculation with adjustments for uncertain events in the world. Sometimes uncertainty can be anticipated, but sometimes market dynamics and world events change drastically. That is where the real analyst mindset comes into play: weighing the margin of error together with human judgement and knowledge. Good data professionals always learn from their mistakes, embrace opportunities to improve, and bring innovation to life.

I have realized that, in addition to hard skills, a data professional's attitude toward difficult situations matters a lot. A know-it-all attitude can erode trust. Let me share an example with you.

A data professional I currently work with has a really good way of operating. Before stakeholder meetings, I ask him a lot of questions about the analysis, anticipating what the stakeholders are likely to ask. He answers most of them because he does his homework really well, but he does not shy away from saying, "I don't know. I will get back to you." Hearing this automatically boosts my confidence: I know I can stand behind the answers we do have, and I can say "I don't know" to my stakeholders as well. That creates a healthy environment full of humility and trust.

The best part is that this attitude cascades from the bottom up rather than from the top down.

There is an art to saying "I don't know", and those who master it go a long way in building trust and relationships with people.

Why do we need to say "I don't know"?

The best data professionals I have come across don't always have an answer, but they have the audacity to ask the right questions. There are several reasons we need to ask deep questions about the insights we draw from data.

Data is great, but we need to question every aspect of it.

Businesses often use published dashboards and reports to understand past trends, and future strategy is shaped by the answers these reports provide. However, reports often fail to capture less obvious drivers. For example, a report might show a dip in sales but not explain why it happened or which factors led to it. Did a competitor enter the market, or did our most recent strategy cause the decline? Sometimes a hypothesis has already formed because of the "known unknowns": for example, the business may already know that a competitor's growing uptake is likely behind the decline. However, there might also have been a government policy change we are not aware of. The report is not designed to capture this, but the organization's analytical culture should encourage asking these questions more and more... nicely, of course!

One of my previous mentors used to say: "Rip your own analysis apart, or I will do it for you."

In my previous consulting organization, one of my mentors used to advise me and my team to rip apart our frameworks, analytical results and insights. He wanted us to be so well prepared for client calls that no anticipated question would go unanswered, and when one did, another mentor guided me, very kindly, on how to handle the "I don't know" part. This has helped me in so many ways. Whenever I face an unstructured problem, I rip my methodology apart, piece by piece, to understand whether it really makes sense and whether there is a better way to do things. The intent is not to reach perfection, but to get as close as possible to the real answer.

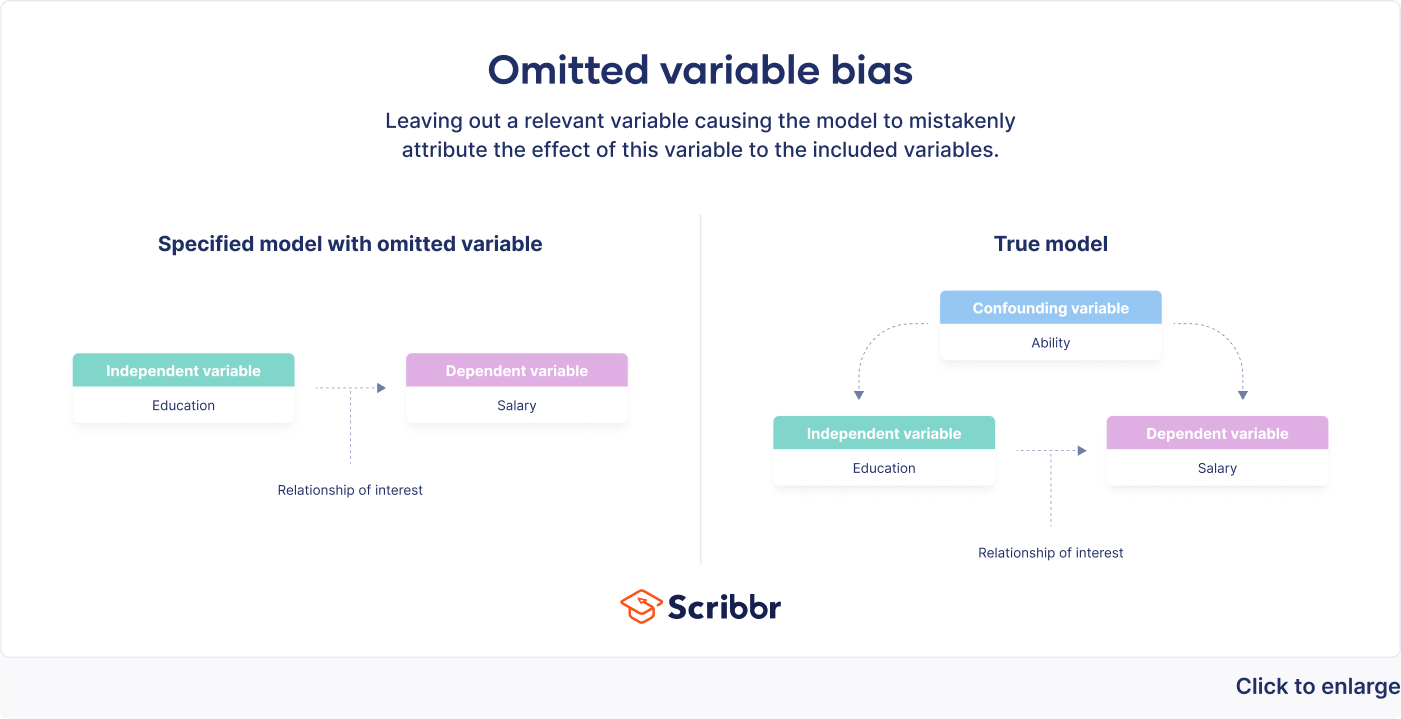

Omitted variable bias is almost everywhere.

Imagine that you are analyzing the impact of education on individuals' salaries. As you would expect, salary tends to rise with education, and the data will likely support this hypothesis. However, work experience also affects salary, and it is typically related to education (people who study longer start working later). If you leave experience out, part of its effect gets absorbed into the education estimate, and the measured effect of education will be wrong. This is omitted variable bias: when a relevant variable, known or unknown, is left out of an analysis, the correlation we measure does not reflect the true causal effect, or at least not accurately. The bias appears whenever the omitted variable both influences the outcome and is correlated with the variable we are studying. It is very common in day-to-day work because it is much easier to account for what is visible to us, or what we know at that point in time.
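To see omitted variable bias in numbers, here is a minimal Python sketch on simulated data. Everything in it is an assumption for illustration: the coefficients (3 salary units per year of education, 1.5 per year of experience), the negative link between schooling and experience, and the noise levels. It fits ordinary least squares with NumPy twice, once leaving experience out and once including it.

```python
import numpy as np

# Hypothetical data-generating process (an assumption for illustration only):
# each extra year of education adds 3 salary units, each extra year of
# experience adds 1.5, and people who studied longer have had fewer years
# to accumulate work experience.
TRUE_EDU_EFFECT = 3.0
TRUE_EXP_EFFECT = 1.5


def simulate(n: int = 20_000, seed: int = 0):
    rng = np.random.default_rng(seed)
    education = rng.normal(14, 2, n)                   # years of schooling
    experience = 30 - education + rng.normal(0, 2, n)  # falls as schooling rises
    salary = (TRUE_EDU_EFFECT * education
              + TRUE_EXP_EFFECT * experience
              + rng.normal(0, 5, n))                   # unexplained noise
    return education, experience, salary


def ols(y, *columns):
    """Ordinary least squares with an intercept; returns the slope coefficients."""
    X = np.column_stack([np.ones_like(y), *columns])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1:]


education, experience, salary = simulate()
short_model = ols(salary, education)             # experience omitted
full_model = ols(salary, education, experience)  # experience included

print(f"education effect, experience omitted:  {short_model[0]:.2f}")
print(f"education effect, experience included: {full_model[0]:.2f}")
```

Because experience drops by about one year for every extra year of schooling in this made-up world, the short model should report an education effect near 3 - 1.5 = 1.5, roughly half the true value, while the full model should recover something close to 3.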

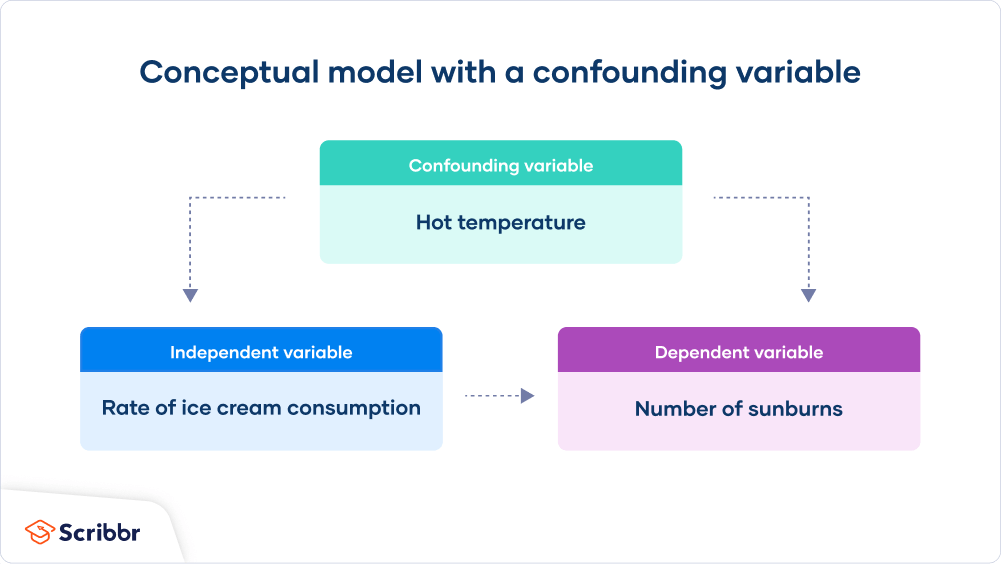

If we understand confounding variables, half our problems become much easier to solve.

In December, one thing that happens in Europe is that sales of everyone's favorite drink, coffee, go up. One study also found that average body weight increases in December. Does this mean coffee consumption leads to weight gain? Probably not.

As you may have already guessed, coffee sales increase because winter is setting in across Europe. Winter also brings psychological and physiological changes that increase appetite, often leading to weight gain. This means there is a third variable, "weather", that affects both the supposed "cause" and the "effect". In this case, weather is a confounding variable.

As with omitted variables, confounders can lead us to overestimate or underestimate the effect of one variable on another, or, as in this example, to see an effect where there may be none.
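A similar sketch can illustrate the coffee example. It simulates a hypothetical world in which temperature drives both coffee consumption and body weight, while coffee has no direct effect on weight at all; the temperatures, cups, kilograms and slopes are all invented. It compares the raw correlation between coffee and weight with the partial correlation left after removing the linear effect of temperature from both.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 10_000

# Hypothetical setup: temperature (the confounder) drives both variables.
# Coffee has NO direct effect on weight in this simulated world.
temperature = rng.normal(10, 8, n)                          # degrees C
coffee_cups = 5 - 0.15 * temperature + rng.normal(0, 0.5, n)
weight_kg = 75 - 0.2 * temperature + rng.normal(0, 1.0, n)


def residualize(y, x):
    """Remove the linear effect of x from y (intercept and slope via least squares)."""
    X = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ coef


# Raw correlation: looks like coffee "goes with" weight.
naive_corr = np.corrcoef(coffee_cups, weight_kg)[0, 1]

# Partial correlation: correlate what is left once temperature is accounted for.
partial_corr = np.corrcoef(residualize(coffee_cups, temperature),
                           residualize(weight_kg, temperature))[0, 1]

print(f"raw correlation:     {naive_corr:.2f}")
print(f"partial correlation: {partial_corr:.2f}")
```

With these invented parameters the raw correlation comes out strongly positive (close to 0.8 by construction), while the partial correlation should sit near zero: once weather is accounted for, coffee tells us nothing about weight.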

As Donald Rumsfeld famously put it, the “category of unknown unknowns is the most difficult to grasp”.

- Known knowns: things we know that we know.

- Known unknowns: things we know that we don't know.

- Unknown unknowns: things we don't know that we don't know.

Recently, I asked one of my business stakeholders whether he thought using generative AI to automate classical machine learning would help his function, which works on many use cases such as customer segmentation.

His response was amazing. He said, "Anurag, I don't know what I don't know." He meant that he was unaware of the possibilities and would love to explore them. This helped me understand how much knowing the possibilities changes things. I then showed him some use cases as proofs of concept, and he was amazed. Often during a data analysis we are unaware of what is possible, and the only way to shrink that blind spot is to learn more (explore the possibilities) and turn "unknown unknowns" into "known unknowns". This reduces the noise in our analysis and makes us aware of when and where to say "I don't know". "I don't know" can be very powerful, you know.

Simpson's paradox is a statistical phenomenon where a trend that appears in multiple groups of data reverses or disappears when the groups are combined.

A hospital runs a year-long study comparing two treatments for the same illness — Treatment A and Treatment B.

At first glance, the numbers are clear:

- Treatment A cured 60% of patients.

- Treatment B cured 70%.

Everyone rushes to conclude that Treatment B is superior. But one curious data scientist isn’t convinced. She says softly:

“I don’t know… something feels off. Let’s break it down.”

When they segment the data by severity of illness, the story flips:

- Among mild cases, Treatment A cured 90%, Treatment B only 85%.

- Among severe cases, Treatment A cured 30%, Treatment B only 25%.

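So, Treatment A is better in both groups, but because a much larger share of Treatment A's patients had severe illness (working backwards from the percentages above, half of A's patients versus a quarter of B's), its overall average made it look worse.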
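The story reports only percentages, so the patient counts below are hypothetical, chosen so that every cure rate matches the example exactly. This small Python sketch pools the counts per treatment and then splits them by severity, which is the "let's break it down" step the data scientist asks for.

```python
# Hypothetical counts (not from the study): (treatment, severity) -> (patients, cured).
COUNTS = {
    ("A", "mild"): (100, 90),    # 90% cured
    ("A", "severe"): (100, 30),  # 30% cured
    ("B", "mild"): (120, 102),   # 85% cured
    ("B", "severe"): (40, 10),   # 25% cured
}


def cure_rate(treatment, severity=None):
    """Share of patients cured for a treatment, optionally within one severity group."""
    patients = cured = 0
    for (t, s), (n, c) in COUNTS.items():
        if t == treatment and (severity is None or s == severity):
            patients += n
            cured += c
    return cured / patients


for treatment in ("A", "B"):
    print(
        f"Treatment {treatment}: overall {cure_rate(treatment):.0%}, "
        f"mild {cure_rate(treatment, 'mild'):.0%}, "
        f"severe {cure_rate(treatment, 'severe'):.0%}"
    )
```

Treatment A wins within each severity group yet loses overall, because half of its hypothetical patients are severe cases (100 of 200) compared with a quarter of Treatment B's (40 of 160).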

When we don’t admit what we don’t know, averages can betray us. Sometimes the truth lives in the subgroups, not the summary.

How do we say "I don't know"?

It's very simple. Think of a situation where you are on the receiving end. If someone keeps giving you rigid answers despite the nuances of the situation and the problem, how would you react? Wouldn't you prefer that they share their thinking and stay open to the idea that they don't know certain things? In fact, you would likely empathize more with someone who acknowledges the unknowns and caveats certain points. The same applies to data: data can answer some questions but not others. So if we are unsure about something, we should not shy away from admitting it.

Some of the best ways to say "I don't know":

- At this stage, the data doesn’t give us a clear answer — we might need to explore further.

- That’s an important point. I don’t have the answer right now, and I’d rather pause than guess.

- I’m not sure — maybe we can look at this together and see what the data tells us.

- That’s outside my current knowledge, but I know who might have the answer.

- I don't know it at the moment, but let me work on this and I will do my best to get you the answer.

Closing Thoughts

I would close by saying that data is a useful tool, but the real magic happens when we combine it with human intelligence and judgement. Real decisions are made by a combination of the two. There are many things data cannot capture, and a smart data professional knows how to separate the knowns from the unknowns. It is a super useful skill, and one that can help us uncover amazing insights we never envisioned.

References