Hands-on exercise - LangGraph

If you work with LLMs, you have most likely at least heard of LangGraph. In my last post, I explained the Lang series, including LangChain, LangGraph and LangSmith.

LangGraph builds on LangChain and helps us create agents. The textbook definition of LangGraph: "LangGraph, created by LangChain, is an open source AI agent framework designed to build, deploy and manage complex generative AI agent workflows."

Let me explain it super simply. Large Language Models can easily hallucinate, or fail to give the response you wanted. Also, when you think about automation and creating more autonomy, LLMs alone cannot do that. They need help: orchestration, the "so what" component, and the "what do I do after this" component. That is why we need agents, and LangChain and LangGraph are open source frameworks that provide the tools and libraries to create them.

For the difference between LangChain, LangGraph and LangSmith, refer to my blog: https://analytics-with-anurag.com/what-are-langchain-langgraph-and-langsmith-simply-explained/

This blog has two sections: an explanation of the fundamental components of LangGraph, and then a hands-on coding exercise in Python to create a simple agent.

Section 1: Components of LangGraph and what they mean

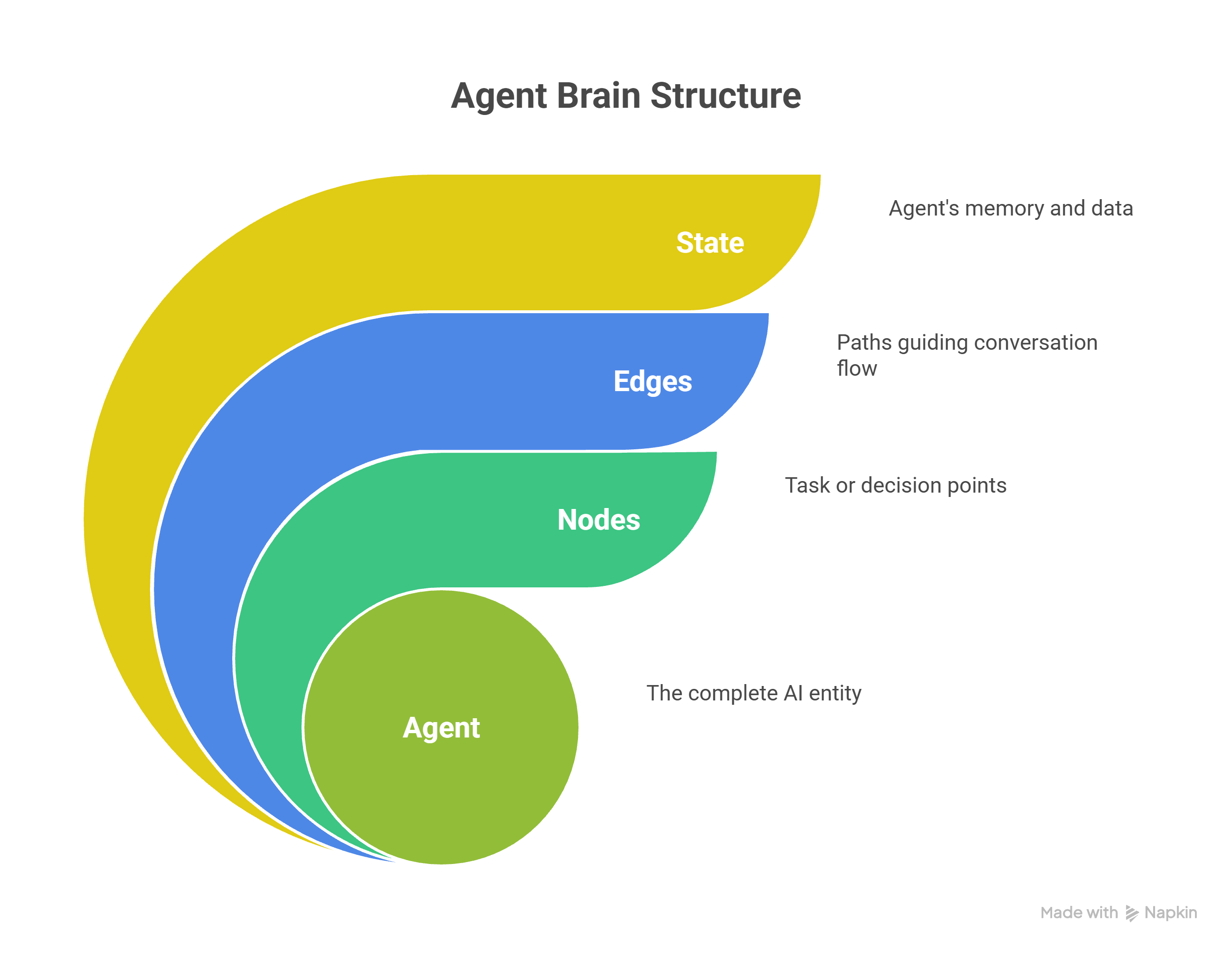

Nodes: nodes are the building blocks of your agent’s brain.

You can think of a node like a small task or decision point. When a user says something, the graph moves through these nodes one by one to figure out what to do.

Each node can do something like:

- Run a tool (e.g. generate a fantasy name)

- Ask the LLM for a response (e.g. write a poem)

- Decide where to go next (e.g. check if the user asked for a name or not)
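
To make the node idea concrete, here is a minimal plain-Python sketch that does not use LangGraph at all: each node is just a function that receives the current state (a dict) and returns the part of the state it wants to update. The function names, the short name list and the use of plain strings as messages are illustrative assumptions, not LangGraph API.

```python
import random

# Illustrative only: plain functions standing in for graph nodes.
# Each one takes the state dict and returns a partial update.

def name_tool_node(state: dict) -> dict:
    """Run a 'tool': pick a fantasy name from a hypothetical list."""
    name = random.choice(["Grom", "Thrall"])
    return {"messages": [f"tool: {name}"]}

def router_node(state: dict) -> dict:
    """Decide where to go next by looking at the last user message."""
    wants_name = "name" in state["messages"][-1].lower()
    return {"next": "name_tool" if wants_name else "llm"}

state = {"messages": ["Give me an orc name"]}
decision = router_node(state)    # which node should run next
update = name_tool_node(state)   # what the tool node would add to the state
print(decision)
print(update)
```

The real nodes in Section 2 follow the same shape: they take the state and return a dictionary with the messages to add.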

Edges: edges are the paths between nodes — they tell the graph what to do next.

- After one node finishes, the edge decides which node runs next.

- Some edges are fixed (like always go from A to B).

- Others are conditional — they choose the next step based on the user’s input.

Think of it like:

Edges = arrows that guide the conversation flow between steps.

Conditional edges: A conditional edge is a smart path — it makes a decision about where to go next based on the conversation.
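
The difference between fixed and conditional edges can be sketched with a tiny hand-rolled runner. This is a plain-Python illustration under my own assumptions (the `NODES` and `EDGES` dictionaries, the `run` helper and the keyword check are all made up for this sketch), not how LangGraph is implemented.

```python
END = "__end__"  # sentinel used by this sketch only

def classify(state):
    state["visited"].append("classify")
    return state

def name_tool(state):
    state["visited"].append("name_tool")
    return state

def llm(state):
    state["visited"].append("llm")
    return state

NODES = {"classify": classify, "name_tool": name_tool, "llm": llm}

def route_after_classify(state):
    """Conditional edge: choose the next node from the user's input."""
    return "name_tool" if "name" in state["input"].lower() else "llm"

# An edge is either a fixed target (a string) or a function that picks one.
EDGES = {
    "classify": route_after_classify,  # conditional edge
    "name_tool": END,                  # fixed edge
    "llm": END,                        # fixed edge
}

def run(user_input, start="classify"):
    """Walk the graph from the start node until END is reached."""
    state = {"input": user_input, "visited": []}
    current = start
    while current != END:
        state = NODES[current](state)
        edge = EDGES[current]
        current = edge(state) if callable(edge) else edge
    return state["visited"]

print(run("Give me an elf name"))  # ['classify', 'name_tool']
print(run("Write a poem"))         # ['classify', 'llm']
```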

State:

Think of state as the agent’s notebook — it holds everything the agent knows at any moment.

- It's a dictionary of data (like messages or memory).

- It gets passed around between nodes in your graph.

- Each node can read from the state, add to it, or update it.
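
Here is a small sketch of how a node's update could be folded back into the state. It assumes, for illustration, that the "messages" key is append-only and every other key is overwritten. This roughly mirrors how LangGraph's MessagesState adds new messages to the list instead of replacing it, but the `apply_update` helper itself is hypothetical.

```python
def apply_update(state: dict, update: dict) -> dict:
    """Merge a node's partial update into the state.

    Assumption for this sketch: "messages" is append-only,
    every other key is overwritten by the latest value.
    """
    merged = dict(state)
    for key, value in update.items():
        if key == "messages":
            merged[key] = state.get(key, []) + list(value)
        else:
            merged[key] = value
    return merged

state = {"messages": ["user: Hi"]}
state = apply_update(state, {"messages": ["assistant: Hello!"]})
state = apply_update(state, {"topic": "greeting"})
print(state)
# {'messages': ['user: Hi', 'assistant: Hello!'], 'topic': 'greeting'}
```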

In this post we will talk about two kinds of state: MessagesState, which ships with LangGraph, and a "memory state", which is not a built-in LangGraph class but a custom state you define yourself by adding a memory field next to the messages.

| Type | What it stores | Use case | Example |
|---|---|---|---|
| MessagesState (built in) | Only the chat messages so far | Great for short chats or tool calls | {"messages": [HumanMessage("Hi")]} |
| Memory state (custom) | Messages + memory object (like ConversationBufferMemory) | Ideal for longer conversations that need context | {"messages": [...], "memory": ConversationBufferMemory(...)} |

That's it!

You now have the fundamentals, so let's move on to building your first agent using LangGraph.


Section 2: Building your first LangGraph Agent using Python

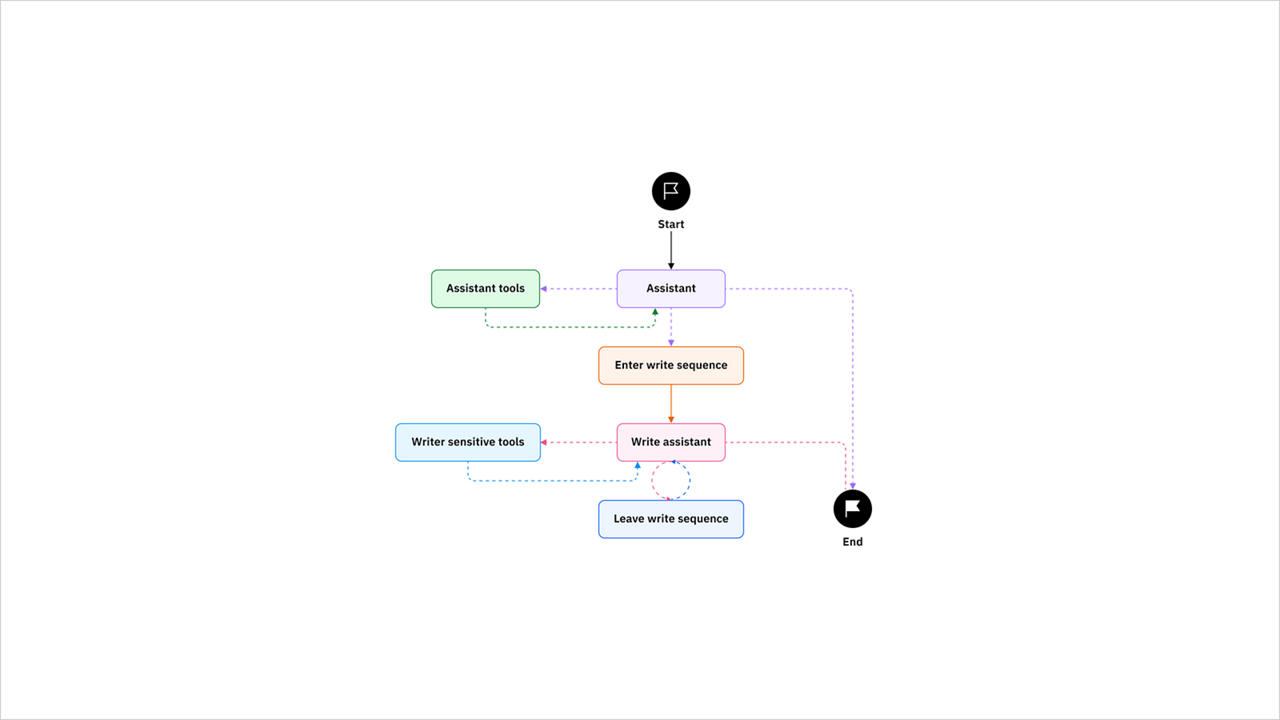

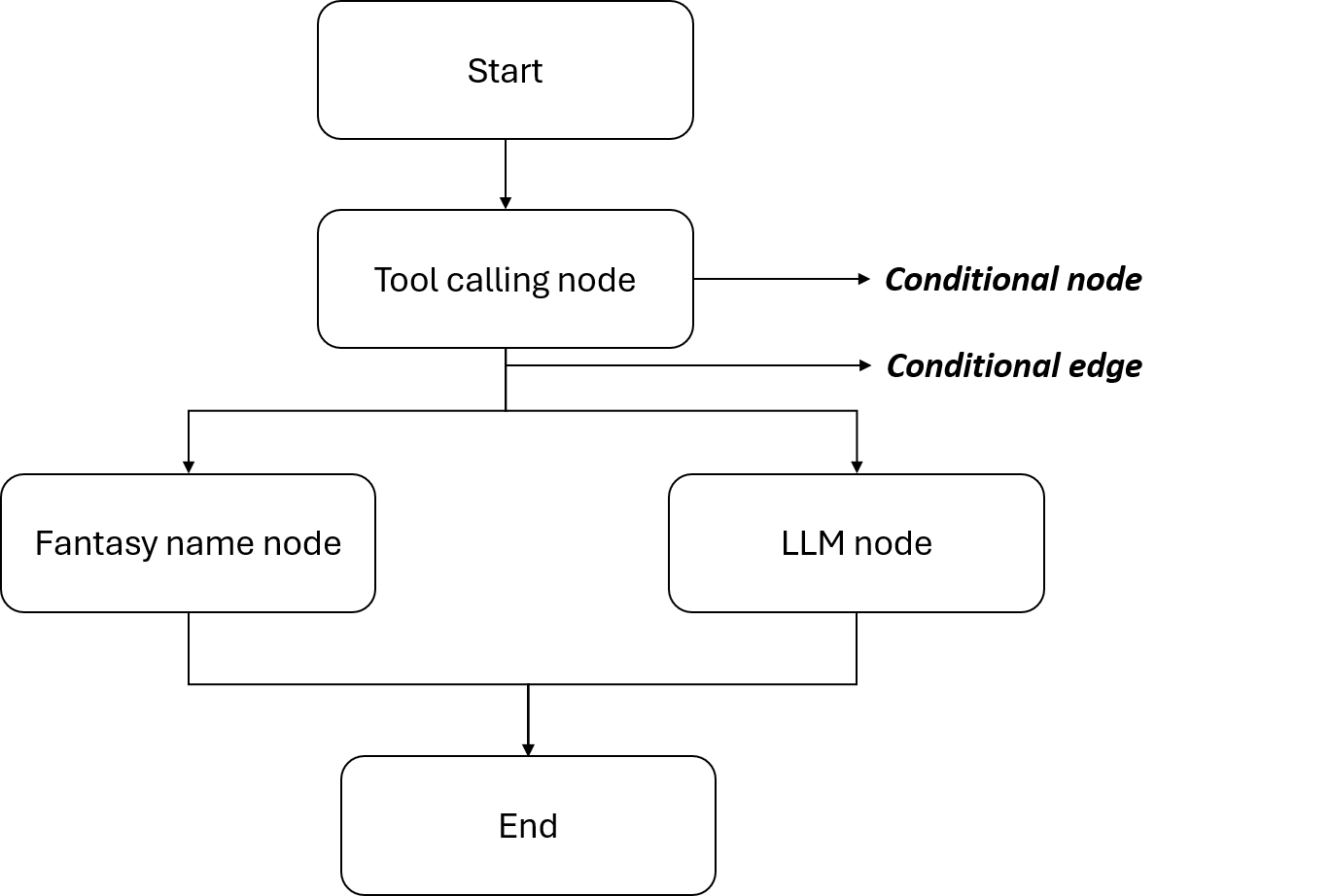

For this example, I wanted to create an agent that generates fantasy names for characters using a Python function. You can think of the function as a tool. If the user asks for anything else, the agent lets the LLM answer the question. For example, if I ask the agent to write a poem, it goes to the LLM block and writes the poem. In short, the flow looks like this:

User asks a question -> Agent checks whether the question is about a fantasy name -> If yes, the agent calls the tool and returns the fantasy name; if not, the LLM answers the question

LLM used: OpenAI's GPT-4o (through ChatOpenAI)

IDE: Jupyter notebooks (other environments such as Cursor or Databricks notebooks work just as well)

Let's go...

Step 1: Import the libraries and set up the environment

# install libraries

!pip uninstall -y pyodbc

!pip install pyodbc==4.0.39

%pip install --quiet -U langchain_openai langchain_core langgraph langgraph-prebuilt

# import required libraries (each name imported once)
from IPython.display import Image, display
from langchain_core.messages import HumanMessage
from langchain_openai import ChatOpenAI
from langgraph.graph import StateGraph, START, END, MessagesState
from langgraph.prebuilt import ToolNode, tools_condition

# Set up the OpenAI API key
import os
import getpass

def _set_env(var: str):
    """Prompt for an environment variable if it is not already set."""
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"Enter your {var}: ")

# Prompt for API key if not already set
_set_env("OPENAI_API_KEY")

Once you run this code, it will prompt you for your OpenAI API key. You can generate a key here: https://platform.openai.com/api-keys

Step 2: Create a function for fantasy name which will act like a tool, and bind it to an LLM

# Setup your OpenAI LLM

llm = ChatOpenAI(model="gpt-4o")

# Create a tool function: it returns a fantasy name for a known species,
# and a random generic fantasy name for any other species
def generate_fantasy_name(species: str) -> str:
    """
    Generate a fantasy name for a given species.

    Args:
        species (str): Species to generate name for.

    Returns:
        str: A fantasy name.
    """
    import random

    names = {
        "orc": ["Grom", "Thrall", "Garrosh", "Krull"],
        "elf": ["Legolas", "Elrond", "Galadriel", "Thranduil"],
        "dwarf": ["Gimli", "Thorin", "Balin", "Dain"],
    }
    return random.choice(names.get(species.lower(), ["Zalor", "Xanoth", "Vorin"]))

# Bind the Tool with the LLM

llm_with_tools = llm.bind_tools([generate_fantasy_name])

# Create a node: this node uses the LLM with tool bindings to process the input messages.
def tool_calling_node(state: MessagesState):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}
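
It helps to see what binding a tool actually gives you. The LLM never runs the function itself. When it decides a tool is needed, it returns a message carrying a tool call (the tool's name plus arguments), and a separate step (the ToolNode in Step 3) executes it. The sketch below imitates that hand-off in plain Python. The tool call is hand-written, its id is a made-up value, and `TOOLS` and `execute_tool_call` are hypothetical helpers for illustration only.

```python
import random

def generate_fantasy_name(species: str) -> str:
    """Same idea as the tool above, repeated so this sketch runs on its own."""
    names = {
        "orc": ["Grom", "Thrall", "Garrosh", "Krull"],
        "elf": ["Legolas", "Elrond", "Galadriel", "Thranduil"],
        "dwarf": ["Gimli", "Thorin", "Balin", "Dain"],
    }
    return random.choice(names.get(species.lower(), ["Zalor", "Xanoth", "Vorin"]))

# Registry of available tools, keyed by name (hypothetical helper).
TOOLS = {"generate_fantasy_name": generate_fantasy_name}

# Hand-written example of a tool call; the id is a made-up value.
tool_call = {
    "name": "generate_fantasy_name",
    "args": {"species": "orc"},
    "id": "call_example_1",
}

def execute_tool_call(call: dict) -> dict:
    """Look up the requested tool and run it with the given arguments."""
    result = TOOLS[call["name"]](**call["args"])
    return {"tool_call_id": call["id"], "content": result}

tool_result = execute_tool_call(tool_call)
print(tool_result)
```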

Step 3: Design a LangGraph Agent

# This code builds a LangGraph flow that routes user messages through either:

# 1. A tool-calling node (e.g., to generate a fantasy name using a tool),

# 2. Or a fallback LLM node (e.g., to answer general questions or generate poems),

# based on whether a tool is required, using conditional logic.

# Import libraries (repeated here so this cell can run on its own)
from langchain_core.messages import HumanMessage
from langchain_openai import ChatOpenAI
from langgraph.graph import StateGraph, START, END, MessagesState
from langgraph.prebuilt import ToolNode, tools_condition

# Start building the agent through Message State

builder = StateGraph(MessagesState)

# Add a node that decides if tool call needed

builder.add_node("tool_calling", tool_calling_node)

# Add a node for tools (only fantasy name tool)

tools_node = ToolNode([generate_fantasy_name])

builder.add_node("tools", tools_node)

# Add a fallback LLM node for poem or other answers

def llm_only_node(state: MessagesState):
    return {"messages": [llm.invoke(state["messages"])]}

builder.add_node("llm_only", llm_only_node)

# Start edge

builder.add_edge(START, "tool_calling")

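# Conditional edges: if tool_calling node detects tool call → tools else → llm_only
# tools_condition returns a node name ("tools" or "__end__"), so compare it explicitly;
# a bare truthiness check would always pick "tools" because both strings are truthy.
def condition_func(state):
    return "tools" if tools_condition(state) == "tools" else "llm_only"

builder.add_conditional_edges("tool_calling", condition_func)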

# Final edges to end

builder.add_edge("tools", END)

builder.add_edge("llm_only", END)

graph = builder.compile()
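
A detail worth keeping in mind when writing a routing function around tools_condition: it returns a node name as a string ("tools" or "__end__"), and any non-empty string is truthy in Python. The sketch below uses a stand-in function (`fake_tools_condition` is hypothetical and only mimics those two return values) to show why the result should be compared against "tools" rather than used directly in an if/else.

```python
def fake_tools_condition(has_tool_call: bool) -> str:
    """Stand-in that mimics the two return values of tools_condition."""
    return "tools" if has_tool_call else "__end__"

def route_by_truthiness(has_tool_call: bool) -> str:
    # Non-empty strings are always truthy, so this never picks "llm_only".
    return "tools" if fake_tools_condition(has_tool_call) else "llm_only"

def route_by_comparison(has_tool_call: bool) -> str:
    # Comparing against the expected node name gives the intended branch.
    return "tools" if fake_tools_condition(has_tool_call) == "tools" else "llm_only"

print(route_by_truthiness(False))   # tools  (wrong branch)
print(route_by_comparison(False))   # llm_only
print(route_by_comparison(True))    # tools
```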

Step 4: Invoke the agent and ask questions

# This function sends the user's input to the graph, invokes the appropriate node
# (either a tool or LLM), and prints the assistant's response.
# It handles both standard messages and those without a pretty_print method.
from langchain_core.messages import HumanMessage

def run_and_print(user_input: str):
    messages = [HumanMessage(content=user_input)]
    output = graph.invoke({"messages": messages})
    for msg in output["messages"]:
        try:
            msg.pretty_print()
        except AttributeError:
            print(f"{getattr(msg, 'role', 'unknown')}: {getattr(msg, 'content', str(msg))}")

Here is the output:

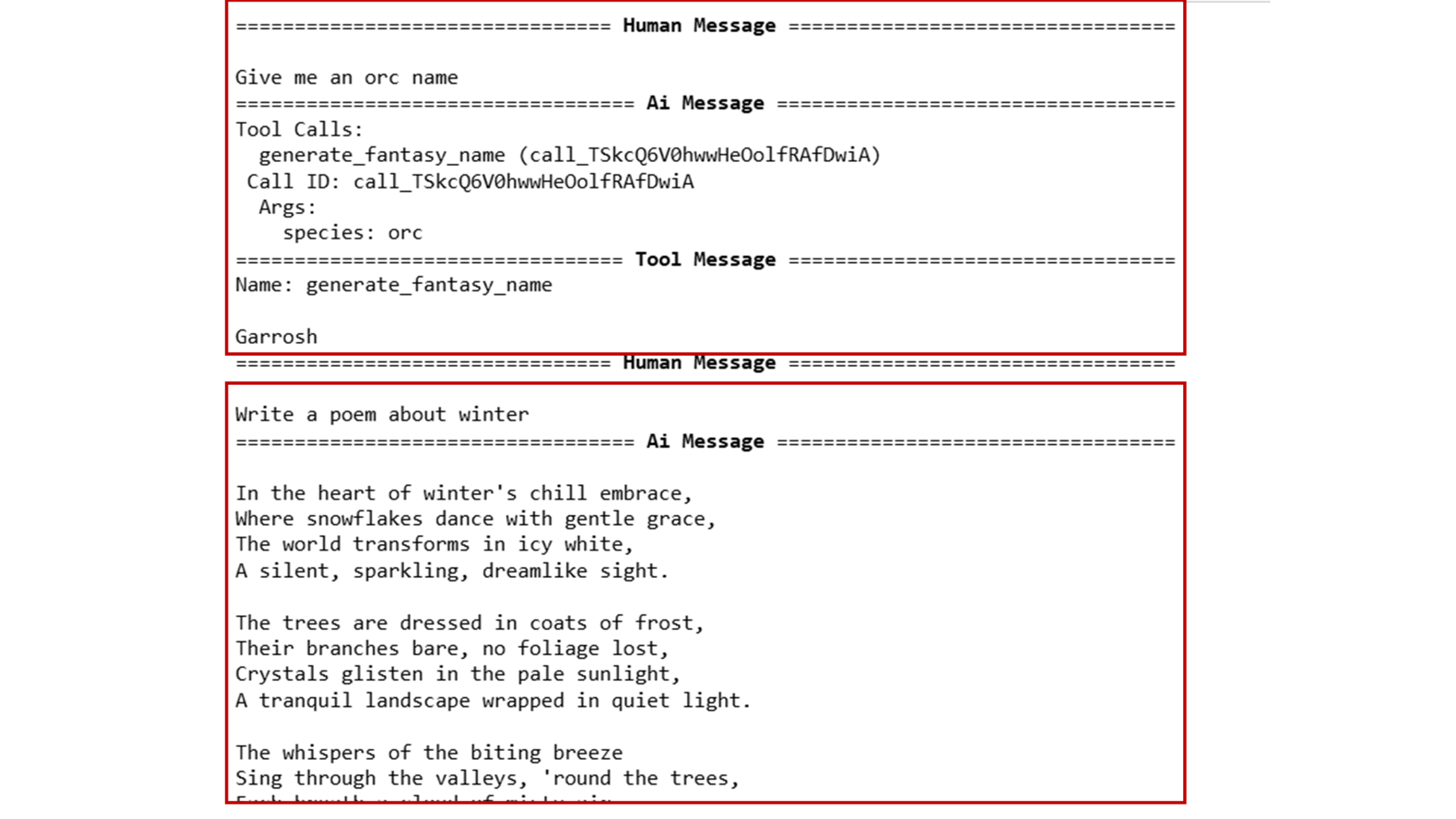

run_and_print("Give me an orc name") # calls generate_fantasy_name tool

run_and_print("Write a poem about winter") # calls fallback llm_only node, generates poem or answer via LLM

When we asked for an orc name, the agent called the function for creating fantasy names. When we asked for something else, the LLM did not request the tool, so the response was generated by the LLM alone.

Let's take this one step further: let us add memory so the agent's state remembers what we are talking about, and let's also create a conversation loop so that it is not a single one-off exchange.
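
Before bringing in any library classes, the idea of "memory plus a loop" can be sketched in plain Python: keep a running history, and on every turn hand the whole history to whatever produces the reply. Everything here is an assumption for illustration. `echo_reply` is a hypothetical stand-in for an LLM call, and `chat_loop` takes a list of inputs instead of reading from the keyboard so it runs unattended.

```python
from typing import Callable, Iterable, List, Tuple

History = List[Tuple[str, str]]  # (role, text) pairs

def echo_reply(history: History) -> str:
    """Hypothetical stand-in for an LLM call: reports how much it remembers."""
    user_turns = [text for role, text in history if role == "user"]
    return f"You have said {len(user_turns)} thing(s); the latest was: {user_turns[-1]}"

def chat_loop(user_inputs: Iterable[str], reply_fn: Callable[[History], str]) -> History:
    """Feed each input to reply_fn together with the full history so far."""
    history: History = []
    for text in user_inputs:
        if text.strip().lower() in {"quit", "exit"}:
            break  # stop the conversation on request
        history.append(("user", text))
        history.append(("assistant", reply_fn(history)))
    return history

history = chat_loop(["Give me an orc name", "Now an elf name", "quit"], echo_reply)
for role, text in history:
    print(f"{role}: {text}")
```

In a notebook you could replace the list of inputs with values read through input(), and replace the stand-in reply function with a call to the model.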

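# Import components for managing conversation memory and building conversational chains.
# - ConversationBufferMemory: stores past messages to maintain context.
# - ChatOpenAI: the chat-based LLM interface (imported from langchain_openai, as in Step 1).
# - ConversationChain: a simple chain that combines the LLM with memory.
# Note: ConversationBufferMemory and ConversationChain are legacy LangChain classes that are
# deprecated in recent releases, so these import paths may require an older langchain version.
from langchain.memory import ConversationBufferMemory
from langchain_openai import ChatOpenAI
from langchain.chains import ConversationChain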

# Chat model setup with memory
# - Initializes a ChatOpenAI model with temperature=0 for more repeatable output
# - Sets up a ConversationBufferMemory to store and return all previous messages
llm = ChatOpenAI(temperature=0)
memory = ConversationBufferMemory(return_messages=True)

# Wrap the LLM with memory in a ConversationChain
# This allows the chatbot to remember and reference past messages during the conversation
conversation_chain = ConversationChain(llm=llm, memory=memory)

Result....

References:

https://academy.langchain.com/courses/intro-to-langgraph