Connecting the dots in Generative AI: where did it all start?

GenAI, ML

With Generative AI now part of the lives of technical and non-technical people alike, it is worth delving into the journey and evolution of AI. AI is not new; it has been part of our lives for decades, but there are specific reasons why it has become omnipresent in today's world. I know the first answer that comes to mind is ChatGPT. That may be true, but it is more important to understand the core of it. In this article, let's go through the journey of AI in a very fundamental way: let's understand how Large Language Models (LLMs) work and how they differ from generic machine learning models.

Section 1: The core of it: you know it more than you know you know it!

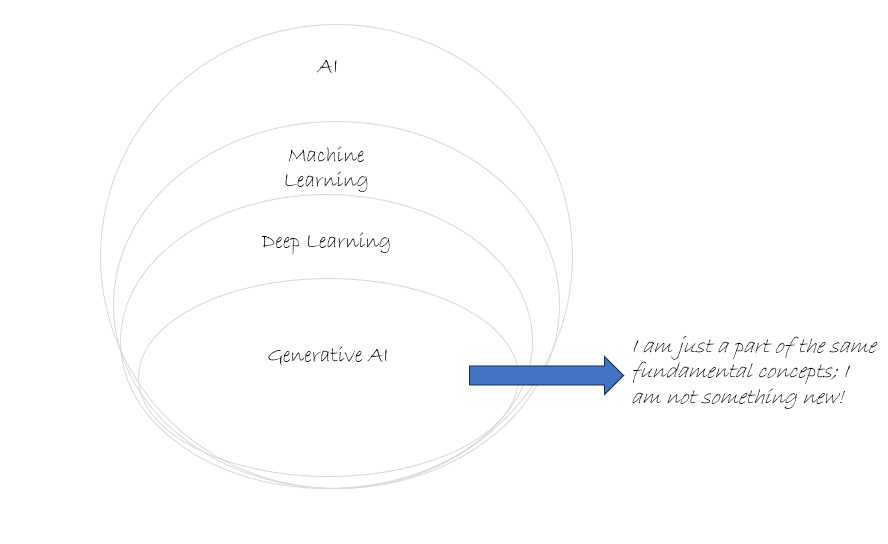

What is AI, and how are LLMs connected to it? What about deep learning: does it have anything to do with LLMs? Let's be honest: with the whole world talking about it, we tend to forget the core fundamentals. Let's understand LLMs by connecting and reconnecting the dots. Simply put, it's all connected. Don't get overwhelmed by the terminology, because everything is fundamentally linked. Let me keep it simple so that it sticks without much hassle.

Artificial Intelligence -> Machine Learning + Deep Learning

Machine Learning -> Supervised Machine Learning + Unsupervised Machine Learning + Semi-supervised machine learning

Deep Learning -> Discriminative deep learning + Generative deep learning

Deep Learning -> Basis of Generative AI

Let me explain the relationships above simply.

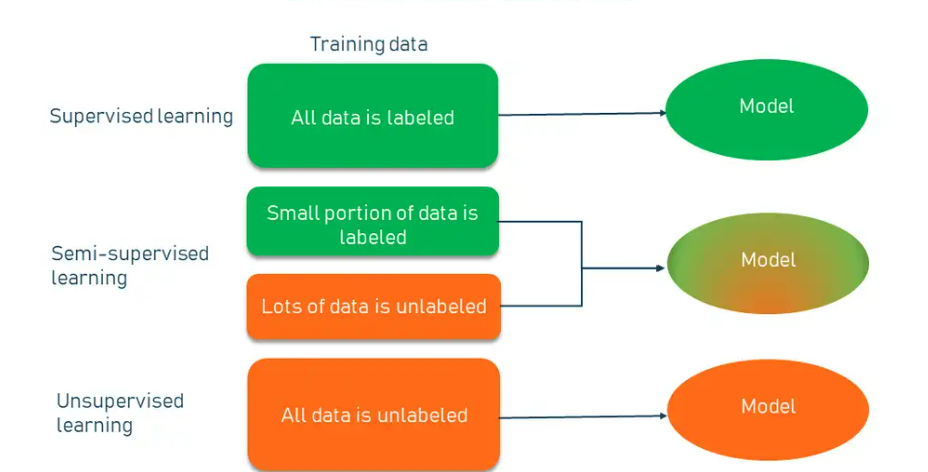

Artificial intelligence is the umbrella field: it includes machine learning, and deep learning is in turn a specialized branch of machine learning. Machine learning includes supervised learning, which learns from labelled data (for example, regression and classification models), and unsupervised learning, which finds patterns in unlabeled data (for example, clustering); semi-supervised learning, covered later, combines the two. Deep learning is based on neural networks, which are made up of layers of interconnected neurons ("deep" refers to stacking many such layers) and can be used for supervised as well as unsupervised learning. Neural networks: this is where it all started! The diagram above summarizes these relationships.
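To make "layers of neurons" concrete, here is a minimal sketch in plain Python of a tiny network with one hidden layer of three neurons and a single output neuron. Each neuron computes a weighted sum of its inputs, adds a bias and applies an activation function. The weights below are hand-picked, hypothetical values; in a real network they would be learned from data, and deep networks stack many more layers.

```python
import math

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases, activation):
    """One layer of neurons: each neuron takes a weighted sum of all inputs,
    adds its bias and passes the result through an activation function."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(weighted_sum))
    return outputs

# Hypothetical, hand-picked weights (a trained network would learn these from data).
HIDDEN_WEIGHTS = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]  # 3 neurons, 2 inputs each
HIDDEN_BIASES = [0.0, 0.1, 0.2]
OUTPUT_WEIGHTS = [[1.0, -1.0, 0.5]]  # 1 output neuron fed by the 3 hidden neurons
OUTPUT_BIASES = [0.0]

def forward(x):
    """Pass an input through the hidden layer, then the output layer."""
    hidden = dense_layer(x, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    output = dense_layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, sigmoid)
    return output[0]  # a value between 0 and 1, e.g. a probability

print(forward([1.0, 2.0]))
```

Training consists of nudging these weights so that outputs like this move closer to the desired answers.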

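Deep learning, in turn, can be either discriminative or generative. Discriminative models are typically trained on labelled datasets and used for classification: as the name suggests, they learn to discriminate between classes, i.e., to predict a label for a given input. Generative models instead learn the patterns in the data well enough to produce new examples of it.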
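The contrast can be sketched on a hypothetical toy dataset (body weights of cats and dogs, invented for illustration). The discriminative side is a logistic regression, effectively a single neuron, that learns p(label | x) from labelled examples. The generative side fits one Gaussian per class, p(x | label), and can then sample brand-new examples. Real generative AI models are deep neural networks rather than single Gaussians; this is only a minimal sketch of the idea.

```python
import math
import random
import statistics

# Hypothetical toy dataset: one feature (body weight in kg) and a label (0 = cat, 1 = dog).
DATA = [(3.5, 0), (4.0, 0), (4.8, 0), (5.2, 0), (20.0, 1), (25.0, 1), (30.0, 1), (22.0, 1)]
XS = [x for x, _ in DATA]
MEAN, STD = statistics.mean(XS), statistics.pstdev(XS)

def scale(x):
    """Standardize the feature so that gradient descent behaves well."""
    return (x - MEAN) / STD

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# --- Discriminative: learn p(label | x) directly (logistic regression, i.e. one neuron). ---
def train_discriminative(data, lr=0.1, epochs=2000):
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(w * scale(x) + b)
            w -= lr * (p - y) * scale(x)  # gradient of the log loss w.r.t. w
            b -= lr * (p - y)             # gradient of the log loss w.r.t. b
    return w, b

def classify(model, x):
    w, b = model
    return 1 if sigmoid(w * scale(x) + b) >= 0.5 else 0

# --- Generative: learn what each class looks like, p(x | label), then sample new data. ---
def train_generative(data):
    params = {}
    for label in {y for _, y in data}:
        values = [x for x, y in data if y == label]
        params[label] = (statistics.mean(values), statistics.stdev(values))
    return params

def generate(params, label, n, rng):
    """Draw n brand-new feature values that resemble the given class."""
    mu, sigma = params[label]
    return [rng.gauss(mu, sigma) for _ in range(n)]

disc = train_discriminative(DATA)
gen = train_generative(DATA)
print(classify(disc, 4.0), classify(disc, 25.0))  # the discriminative model can only label inputs
print(generate(gen, 1, 3, random.Random(0)))      # the generative model can create new "dog-like" weights
```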

Let's finally come to Generative AI, which is a class within deep learning. Generative AI is the type of AI that generates new content (such as text, code, images and videos) based on what it has learnt during training. Generative models are often described as semi-supervised: they are fed a huge amount of unlabeled data, usually combined with a smaller amount of labelled or human-curated data. ChatGPT (originally built on OpenAI's GPT-3.5 model) is one example of GenAI, but it is not the only GenAI model out there. OpenAI made ChatGPT public in November 2022, and you know the rest of the story. Let's move to the next section.

Section 2: Different types of generative models

There are many kinds of Generative AI models. Let me explain a few of them briefly, without getting into the technical details.

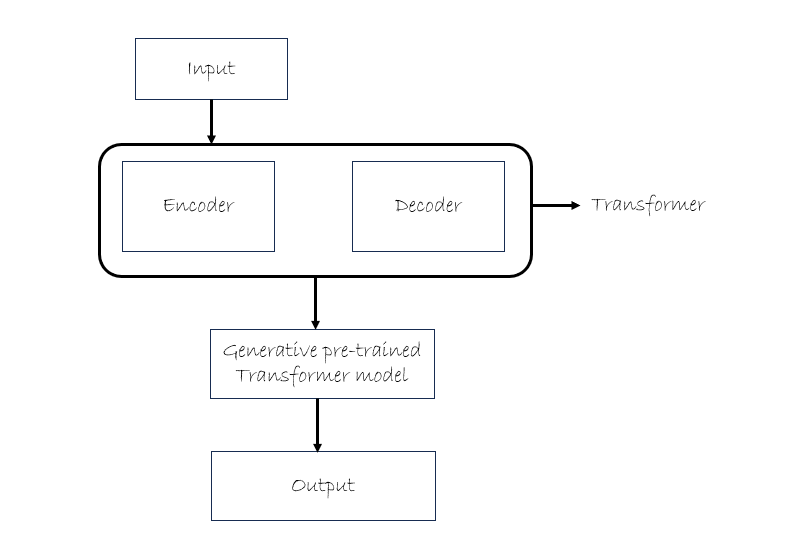

1) Variational Autoencoder - Variational autoencoders (VAEs) are based on the encoder-decoder concept. The encoder takes the input, which can be labelled or unlabeled data, and compresses it into a dense, lower-dimensional representation. The decoder takes this encoding (the output of the encoder) and reconstructs the original input from it. Together, they form an autoencoder. The "variational" part is what gives the model its generative capabilities: instead of mapping each input to a single point, the encoder outputs a probability distribution, so new points can be sampled from it and decoded into completely new content. VAEs are mainly used to generate new data that resembles the training data, such as new images; the representations they learn can also be reused for other tasks, such as classifying an image of a cat as "cat". The fundamentals are the same: neural networks. Both the encoder and the decoder are neural networks.
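The "variational" step can be sketched in a few lines, assuming the usual setup where the encoder outputs a mean (`mu`) and a log-variance (`log_var`) per latent dimension. The sketch shows the sampling step (the "reparameterization trick") and the KL-divergence term that keeps the latent space close to a standard normal distribution. The encoder outputs below are hypothetical numbers, and the encoder and decoder networks themselves are not shown.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps drawn from N(0, 1).
    mu and log_var (log of the variance) come from the encoder, one pair per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, sigma^2) and the standard normal N(0, 1), summed over dimensions.
    During training it is added to the reconstruction error, keeping the latent space smooth."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

rng = random.Random(42)

# Hypothetical encoder output for one input image (a 2-dimensional latent space).
mu, log_var = [0.5, -1.0], [-0.2, 0.1]
z = sample_latent(mu, log_var, rng)  # would be passed to the decoder for reconstruction
print(z, kl_to_standard_normal(mu, log_var))

# Generating new content: sample z straight from N(0, 1) and decode it.
new_z = [rng.gauss(0.0, 1.0) for _ in range(2)]  # a trained decoder (not shown) would turn this into an image
```

Because the KL term pulls every encoding towards N(0, 1), random points drawn from N(0, 1) later decode into plausible new content rather than noise.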

2) Generative Adversarial Network - GANs were introduced around 2014 to produce new images (the generative component) that resemble the images the model was trained on (the training component). This type of GenAI model has two main parts: a generator and a discriminator. The generator generates new content, and the discriminator judges whether the generator's output is plausible, i.e., whether it looks like real training data or a fake. The two are trained against each other (hence "adversarial"), and this competition pushes the generator to produce new, plausible content.

GANs have several applications, from image recognition and natural language processing to generating photos from text descriptions (text-to-image translation). In fact, many AI startups that generate new images have built their backend models on GANs.
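The tug-of-war between generator and discriminator is expressed through two loss functions. The minimal sketch below computes them from hypothetical discriminator outputs (probabilities that a sample is real); it uses the binary cross-entropy loss for the discriminator and the "non-saturating" generator loss suggested in the original GAN paper. A real GAN would alternate gradient-descent steps on both neural networks using these losses; that training loop is not shown.

```python
import math

EPS = 1e-12  # keeps log() away from log(0)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.
    d_real: its probabilities that real training samples are real (it wants these near 1).
    d_fake: its probabilities that generated samples are real (it wants these near 0)."""
    real_term = sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants the discriminator to call its fakes real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs at two stages of training.
confident_discriminator = ([0.95, 0.9], [0.05, 0.1])  # easily spots the fakes
fooled_discriminator = ([0.5, 0.5], [0.5, 0.5])       # can no longer tell real from fake

for name, (d_real, d_fake) in [("confident", confident_discriminator), ("fooled", fooled_discriminator)]:
    print(name, round(discriminator_loss(d_real, d_fake), 3), round(generator_loss(d_fake), 3))
```

When the discriminator is confident, its loss is low and the generator's loss is high; the generator improves until the discriminator is reduced to guessing (outputs near 0.5), which is the theoretical end point of GAN training.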

3) GPT models - GPT (Generative Pre-trained Transformer) models are built on the transformer neural network architecture. The original transformer contains an encoder and a decoder; GPT models use only the decoder part. The model is fed vast amounts of unlabeled text, typically from online sources such as books, articles and websites. That is where the "pre-trained" in the name comes from: the model is first trained on this huge body of text, using lots of GPUs, so that it learns enough context to predict what comes next. For more technical context, this approach is described as semi-supervised machine learning. Let's now understand the term semi-supervised learning.

Supervised learning <- labelled data

Unsupervised learning <- unlabeled data

Semi-supervised learning <- a small amount of labelled data + a large amount of unlabeled data (for example, self-training, where the model provides the labels for the unlabeled content itself)
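Self-training can be sketched with a deliberately simple model, assuming a hypothetical 1-D dataset and a nearest-centroid classifier (a stand-in for a real model). The model is first trained on the few labelled points, then labels the unlabeled points itself (pseudo-labels), and is retrained on both. Real systems usually keep only the pseudo-labels the model is most confident about.

```python
# Hypothetical 1-D data: only two points carry human labels, the rest are unlabeled.
labelled = [(1.0, "A"), (9.0, "B")]
unlabeled = [1.5, 2.0, 2.2, 3.0, 7.5, 8.0, 8.4, 10.0]

def fit_centroids(points):
    """'Train' a nearest-centroid classifier: remember the average value of each label."""
    groups = {}
    for x, label in points:
        groups.setdefault(label, []).append(x)
    return {label: sum(xs) / len(xs) for label, xs in groups.items()}

def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

def self_train(labelled, unlabeled, rounds=3):
    model = fit_centroids(labelled)  # step 1: supervised training on the few labelled points
    for _ in range(rounds):
        # step 2: the model labels the unlabeled data itself (pseudo-labels) ...
        pseudo_labelled = [(x, predict(model, x)) for x in unlabeled]
        # step 3: ... and is retrained on the human labels plus its own pseudo-labels.
        model = fit_centroids(labelled + pseudo_labelled)
    return model

model = self_train(labelled, unlabeled)
print(model, predict(model, 4.0))
```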

OpenAI's ChatGPT is based on the GPT methodology. After GPT-1, GPT-2 and GPT-3, OpenAI launched the state-of-the-art GPT-3.5 and subsequently GPT-4. Both generate text the same basic way, by predicting the next token from a probability distribution, and both are offered as chat models. GPT-3.5 grew out of completion-style models that continue the text of a prompt, while GPT-4 is a more capable successor that is better at gauging the "context" behind a conversation.
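At the heart of the transformer that GPT models are built on is self-attention. A minimal sketch of scaled dot-product attention with a causal mask, the variant used in decoder-only models such as GPT, is shown below in plain Python. The token vectors are hypothetical; in a real model, queries, keys and values come from learned projections, and many attention heads and layers are stacked.

```python
import math

def softmax(scores):
    m = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention with a causal mask.
    Token i only attends to tokens 0..i, so the model cannot peek at the words it must predict."""
    d_k = len(keys[0])
    outputs, attention = [], []
    for i, q in enumerate(queries):
        scores = [dot(q, keys[j]) / math.sqrt(d_k) for j in range(i + 1)]  # past and current tokens only
        weights = softmax(scores)
        # Each output vector is a weighted average of the value vectors the token attended to.
        outputs.append([sum(w * values[j][d] for j, w in enumerate(weights)) for d in range(len(values[0]))])
        attention.append(weights)
    return outputs, attention

# Hypothetical 2-dimensional vectors for the tokens "the", "cat", "sat".
# In a real transformer, queries, keys and values come from learned projections; here all three are the same.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, attention = causal_self_attention(tokens, tokens, tokens)
for row in attention:
    print([round(w, 2) for w in row])
```

The causal mask is what makes next-token prediction possible: each position mixes in information only from the words before it.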

To summarize, if you want a simple way to remember how a GPT model works, remember the image below:

Section 3: What then is a Large Language Model?

Let's break down the term LLM into its components: LLM = L + LM.

L = Large. LM = Language model.

A language model, simply put, predicts the next word, token or sentence for a given sentence or phrase, based on the context. It does this through probability distributions: it assigns a probability to each possible continuation. In the simplest case, the word or sentence with the highest probability is the output; in practice, models often sample from the distribution instead, which makes their output less repetitive. A language model can be very simple or highly complex, and it may or may not be a neural network (classic n-gram models, for example, are based purely on counting word sequences).
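A bigram model is about the simplest language model there is, and it involves no neural network at all. The sketch below, trained on a hypothetical three-sentence corpus, counts which word follows which, turns the counts into probabilities P(next word | current word), and then either picks the most probable next word (greedy) or samples from the distribution. LLMs replace this counting with a large neural network that conditions on a much longer context.

```python
import random
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count which word follows which, then turn the counts into probabilities P(next | current)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    model = {}
    for word, followers in counts.items():
        total = sum(followers.values())
        model[word] = {nxt: c / total for nxt, c in followers.items()}
    return model

def predict_next(model, word, rng=None):
    dist = model[word]
    if rng is None:
        return max(dist, key=dist.get)  # greedy: the most probable next word
    return rng.choices(list(dist), weights=list(dist.values()))[0]  # sample from the distribution

corpus = [  # hypothetical toy training text
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram_model(corpus)
print(model["the"])
print(predict_next(model, "the"))  # cat
print(predict_next(model, "the", random.Random(0)))
```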

The word "large" refers to the huge amount of text the model is trained on, and also to the size of the model itself, which typically has billions of parameters. To complete sentences, tokens and phrases, powerful neural networks are trained on vast amounts of text so that they can answer queries, complete sentences and, in short, imitate human-like responses. In other words, LLMs are language models built on large neural networks, and that is what makes them so powerful.
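To get a feel for what "large" means in parameters, a common rule of thumb estimates a GPT-style transformer's size as about 12 × d_model² weights per layer (attention projections plus the feed-forward block), plus the token-embedding table. The sketch below applies this approximation, which ignores biases, layer norms and position embeddings, to the published GPT-3 configuration (96 layers, d_model = 12288, a vocabulary of about 50k tokens).

```python
def approx_transformer_params(n_layers, d_model, vocab_size):
    """Rough parameter count for a GPT-style transformer (rule-of-thumb approximation).
    Per layer: ~4*d^2 attention weights (Q, K, V and output projections)
    plus ~8*d^2 feed-forward weights (two d x 4d matrices) = ~12*d^2.
    Biases, layer norms and position embeddings are ignored; token embeddings add vocab_size * d."""
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings

# GPT-3 (175B) configuration as published: 96 layers, d_model = 12288, 50257-token vocabulary.
print(f"{approx_transformer_params(96, 12288, 50257) / 1e9:.0f} billion")  # → 175 billion
```

The estimate lands close to GPT-3's reported 175 billion parameters, which shows that almost all of them sit in the repeated transformer layers.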

Then what is the difference between LLMs and Generative AI?

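Well, LLMs are one family of Generative AI models: they specialize in language, whereas Generative AI also covers models that generate images, audio or video. ChatGPT is built on an LLM, but it is not the only LLM out there. There are many different LLMs; they are built on the same fundamentals but are trained, fine-tuned and refined (for example, with reinforcement learning) differently from one another. Some examples include: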

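A) BERT - Google's BERT is a transformer-based model like GPT, but it uses only the encoder part of the transformer. Rather than generating free-form text, it is typically fine-tuned for tasks such as classification and answering questions by locating the answer in a given passage.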

B) GPT - GPT models are among the best-known and most capable LLMs currently available. They are developed by OpenAI and come in many versions (GPT-3, GPT-3.5, GPT-4, etc.). Not only are they available to the public through ChatGPT, they can also be fine-tuned for a specific purpose within an organization. For instance, a pharmaceutical company could fine-tune a GPT model on clinical information to create a special-purpose model and deploy it within its own cloud environment. We are likely to see many more such deployments across companies in the coming decade.

C) PaLM - Google's Pathways Language Model (PaLM) is a transformer language model capable of common-sense and arithmetic reasoning, explaining jokes, generating code, etc.

And many more...

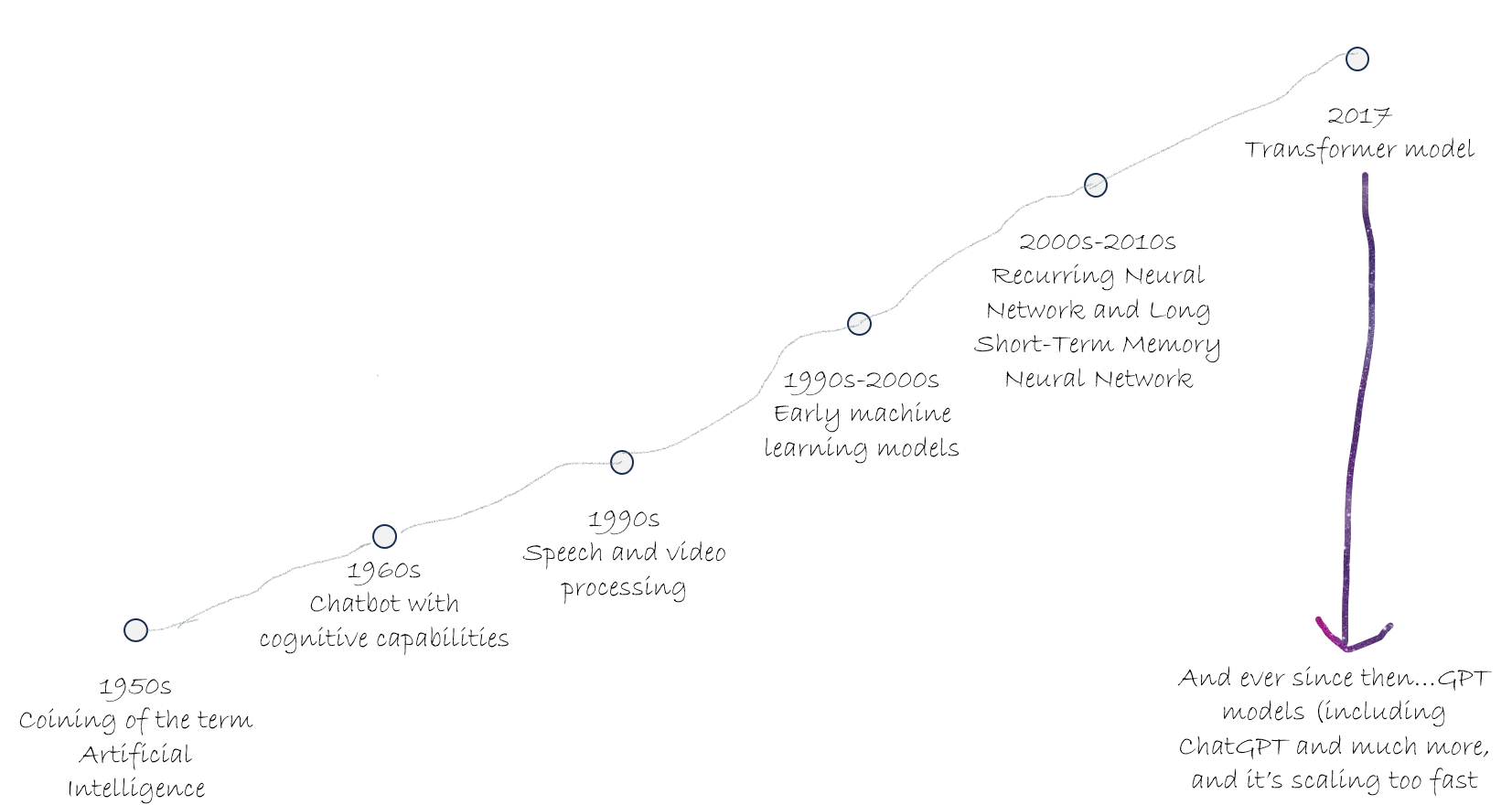

Section 4: The evolution

Well, AI goes way back, and its adoption has been rising for decades. It caught everyone's attention recently when ChatGPT's adoption went viral (it reached 100 million users in record-breaking time). AI's evolution has been very granular, with many developments over time. I have tried to summarize some of them in a visual.

AI keeps rising, and there seems to be no stopping it, to the extent that there have been concerns about its uptake and about it overpowering humans. There have been theories, publications, remarks and comments. The truth is, no one knows how AI will revolutionize the way we live, work and perhaps even function. However, there have been disruptions, and there will be more.

According to the theory of economic bubbles, a rapid rise in market prices is followed by a sudden crash as investors move out of overvalued assets, with few or no clear indicators of when that will happen. AI is not an asset as such; however, it is becoming an idea, a curiosity, a term whose hype is spreading faster than the actual power of the technology behind it.

Section 5: The Summary

Well, you will find a lot of technical material out there, and you can take courses on Coursera and Udemy to learn to code an LLM or a Generative AI model. My objective with this blog was to give you a quick, fundamental walk through the lineage of GenAI.

So, to summarize.... drum roll:

Generative AI falls within deep learning and neural networks, whose core concept is loosely inspired by the human brain. GenAI generates new content based on what it has "learnt before". Making the models learn is where all the complexity and competition lies: a huge amount of training data is required to close the gap between the model's output and what is expected of it.
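"The gap between the model's output and what is expected" is measured by a loss function. For language models this is usually cross-entropy: the negative log of the probability the model assigned to the correct next word. The minimal sketch below uses hypothetical predictions before and after training; real training averages this loss over billions of tokens and adjusts the network's weights with gradient descent to reduce it.

```python
import math

def cross_entropy(predicted_probs, target):
    """Loss for one prediction: -log of the probability the model gave to the correct answer.
    Lower is better; 0 would mean the model was 100% sure of the right answer."""
    return -math.log(predicted_probs[target])

# Hypothetical next-word predictions for "the cat sat on the ___", where the expected word is "mat".
before_training = {"mat": 0.25, "dog": 0.25, "sky": 0.25, "fish": 0.25}
after_training = {"mat": 0.85, "dog": 0.05, "sky": 0.05, "fish": 0.05}
print(cross_entropy(before_training, "mat"))  # ≈ 1.386
print(cross_entropy(after_training, "mat"))   # ≈ 0.163
```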
